Using Pytest for Everything

—

Pytest is a testing framework suitable for testing all kinds of applications. It’s written in Python, but my experience is that it works great for testing programs in any language. Of course it won’t replace unit tests, but for integration testing it’s been doing great.

Integration Testing

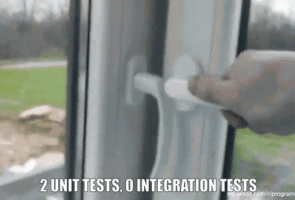

Why do we need another level of tests when we already have unit tests which cover 100% of our code? I’d argue that integration tests which actually run your end product are even more important than unit tests.

There are things which are easier to test on one level or another. Functions which parse strings are great examples of where unit tests shine. They usually accept a string and produce some kind of list of tokens or an abstract syntax tree. Their interface doesn’t change very frequently, so it’s easy to add new strings to the list and see how our parser behaves. If a bug occurs, we usually don’t change the interface but the implementation, so we’re not wasting our time adapting the test suite as well.

Integration tests have similar properties, but at the scope of the whole program. With applications which run on a particular OS, we’re limited to the communication interfaces it gives us. Typically we communicate with our programs by passing them a list of arguments, through standard input, or through a socket (network or Unix), and we listen for their responses on standard output/error or on a socket.

Of course, protocols used by programs can be very complex. I’m not proposing to write parsers for raw responses. If our program writes to a database, it’ll be better to create a test database and read it. If it speaks a binary protocol, we must decode its messages. Fortunately, there are Python libraries for the most popular protocols, and in the worst case we can use the stdlib’s struct module.
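As a minimal sketch of that worst case, assume a made-up wire format in which every message starts with a 2-byte type and a 4-byte payload length. Both the format and the helper names below are assumptions for illustration only; a real program’s protocol will differ.

```python
import struct

# Hypothetical wire format: message type (2 bytes) and payload length
# (4 bytes), both unsigned big-endian, followed by the payload itself.
HEADER = struct.Struct(">HI")


def encode_message(msg_type: int, payload: bytes) -> bytes:
    """Build a raw message, e.g. to feed it to the program under test."""
    return HEADER.pack(msg_type, len(payload)) + payload


def decode_message(raw: bytes) -> tuple[int, bytes]:
    """Split a raw message into its type and payload."""
    msg_type, length = HEADER.unpack_from(raw)
    payload = raw[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated message")
    return msg_type, payload
```

A test can then compare decoded values instead of raw bytes, which keeps the assertions readable.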

The biggest reason for me to write integration tests is that I trust the applications which have them. Applications are more than the sum of their parts. Even well-tested units might misbehave when they are tied together. With integration tests we can replicate real-life scenarios of how the application will be used. We can stress-test and test against data loss scenarios. In software, trust is everything.

I will focus on ordinary command line applications, but keep in mind that Pytest can be used to test any kind of application, although you’ll likely have to use additional libraries. For example:

- to test graphical user interfaces there’s pyautogui, which implements awesome locate functions for navigating graphical elements; there are specialized framework-specific libraries as well;

- for testing applications which write things to databases, you’ll have to create this database in the tests and access it from the test, e.g. with the built-in sqlite3 module;

- for testing network calls you can prepare an HTTP endpoint with the built-in http.server.Base. I did it for GWS, so take a look for inspiration.
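To illustrate the database case, here is a hedged sketch using sqlite3. The users table, the read_users helper and the file name app.db are all hypothetical; in a real suite the program under test would create and fill the database, which this example only imitates.

```python
import sqlite3
from pathlib import Path


def read_users(db_path: Path) -> list[str]:
    """Return all user names stored in the (hypothetical) users table."""
    con = sqlite3.connect(db_path)
    try:
        rows = con.execute("SELECT name FROM users ORDER BY name").fetchall()
    finally:
        con.close()
    return [name for (name,) in rows]


def test_program_stores_users(tmp_path):
    db_path = tmp_path / "app.db"

    # Stand-in for running the real program, which would normally
    # create the database and write to it.
    con = sqlite3.connect(db_path)
    with con:  # commits the transaction on success
        con.execute("CREATE TABLE users (name TEXT)")
        con.executemany("INSERT INTO users VALUES (?)", [("Doug",), ("Ann",)])
    con.close()

    # The test inspects the database directly instead of parsing output.
    assert read_users(db_path) == ["Ann", "Doug"]
```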

Why Pytest?

I chose Pytest as a framework for integration testing because it just works and gives us the power of vast Python ecosystem. It’s also very easy to immediately dive in (and I hope that this article will make the jump even easier).

Now, let’s see what batteries are included in Pytest.

Fixtures

The rule of thumb is that we should run each test separately from all the other ones. In other words, we don’t want tests to affect each other. Pytest creates and destroys a separate context for each test automatically. It implements this idea with fixtures.

A fixture is code which is called before the actual test. Usually it prepares a context for that particular test, but in practice fixtures can do anything: they prepare a temporary file system for our application, initialize databases, set up HTTP servers, prepare helper functions. The list just goes on and on.

How do we use them? First, we need a function, which we decorate with the @pytest.fixture decorator. To use such a fixture in a test function or in another fixture, we give one of the parameters the fixture’s name, and Pytest will automatically execute the fixture and pass its return value to that parameter.

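    import pytest


    @pytest.fixture
    def db():
        # Database stands for whatever database handle our tests need;
        # it is not defined in this snippet
        return Database()


    @pytest.fixture
    def user(db):
        u = "Doug"
        db.add_user(u)
        return u


    def test_database_access(db, user):
        assert db.has_user(user)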

Pytest makes sure that each fixture is evaluated only once per test, even when several other fixtures request it, but we can change this behaviour with the scope parameter. For example, @pytest.fixture(scope="session") creates a fixture which is evaluated only once during the whole test session. It’s particularly useful for resource-heavy fixtures.
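To make the scope parameter concrete, here is a small sketch. The Database class is a hypothetical in-memory stand-in invented for this example; the yield-based teardown is standard Pytest behaviour.

```python
import pytest


class Database:
    """Hypothetical in-memory stand-in for an expensive database handle."""

    def __init__(self):
        self.users = set()
        self.closed = False

    def add_user(self, name):
        self.users.add(name)

    def has_user(self, name):
        return name in self.users

    def close(self):
        self.closed = True


@pytest.fixture(scope="session")
def db():
    database = Database()  # created once for the whole test session
    yield database         # every test requesting `db` gets this object
    database.close()       # teardown: runs once, after the last test


@pytest.fixture
def user(db):
    # Function-scoped: runs again for every test that requests it.
    name = "Doug"
    db.add_user(name)
    return name


def test_database_access(db, user):
    assert db.has_user(user)
```

The price of a wider scope is shared state: whatever one test leaves in a session-scoped fixture is visible to the tests which run after it.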

One note: keep in mind that there are other things which can leak into our test environment and affect the results. We must deal with some nasty global states, like the file system and environment variables. Read on.

Monkeypatching

Pytest has built-in support for monkeypatching. It is a way of mocking (or changing/patching) some global state in a reliable, reversible way: the global state is restored to its original form when the monkeypatch fixture is torn down. In an integration test suite we will use it to change environment variables for our commands, to temporarily change the current working directory (CWD), or to maintain some dictionaries from which the configuration for our programs is generated.
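A short sketch of those three uses follows. The CONFIG dictionary and the variable names are assumptions made up for the example; setenv, delenv, chdir and setitem are methods of the built-in monkeypatch fixture.

```python
import os

# Hypothetical module-level configuration from which a program's
# settings would be generated.
CONFIG = {"color": "auto"}


def test_patched_environment(monkeypatch, tmp_path):
    monkeypatch.setenv("LANG", "C")
    # raising=False keeps delenv quiet when the variable isn't set at all
    monkeypatch.delenv("EDITOR", raising=False)
    monkeypatch.chdir(tmp_path)
    monkeypatch.setitem(CONFIG, "color", "never")

    assert os.environ["LANG"] == "C"
    assert "EDITOR" not in os.environ
    assert os.path.samefile(os.getcwd(), tmp_path)
    assert CONFIG["color"] == "never"
    # All four changes are undone automatically after the test returns.
```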

Clean Temporary Directories

Pytest automatically creates a separate temporary directory for each test. We can use them to set up a safe, empty space for our applications. Pytest automatically rotates these temporary directories, so only directories from the latest runs (three by default) are kept. This way you don’t need to worry about cluttering your hard drive or filling up your RAM [1].
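In a test, such a directory is available through the built-in tmp_path fixture. The sketch below only imitates a program writing a file; the file name out.txt is an assumption.

```python
def test_output_file(tmp_path):
    # tmp_path is a pathlib.Path pointing at a fresh, empty directory
    assert list(tmp_path.iterdir()) == []

    # Stand-in for the program under test writing its output
    out = tmp_path / "out.txt"
    out.write_text("Hello, world!\n")

    assert out.read_text().splitlines() == ["Hello, world!"]
```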

Python

Last but not least, we have the whole Python ecosystem available. There are so many high-quality libraries designed to help you with almost any task. For example, there’s the sh library, designed as a subprocess replacement, which might simplify the code for running commands. For all your HTTP needs there’s the widespread requests library, which is more user-friendly than the built-in urllib, and so on.

Running the Tests

Preparing the Environment

Let’s start at the very beginning. A very good place to start.

Pretend that we’ll use Pytest to test a simple C application. After following all the steps in this section we’ll end up with a project directory which looks like this:

    .
    +-- .testenv/
    +-- test/
    |   +-- conftest.py
    |   +-- test_prog.py
    +-- check
    +-- main.c
    +-- Makefile
    +-- test-requirements.txt

The code of our application is in main.c. We’re going to build and install it with ordinary Make.

We’ll install pytest in a separate virtualenv which we’ll create in a hidden .testenv directory. Virtualenvs are Python-specific lightweight environments isolated from the system installation of Python. We can add Python packages to them and remove packages from them. They’re particularly useful when developing Python applications, but we’ll use one simply to install Pytest and any other Python packages we need. We’ll keep a list of them in the test-requirements.txt file. We’ll start really simple, with only two libraries:

    # test-requirements.txt
    pytest==7.2.0
    sh==1.14.2

pytest is self-explanatory, but what’s sh? It’s a replacement for the built-in subprocess library which we use to call external commands. It allows running programs as if they were ordinary functions. For example, we can run Make by calling sh.make(opts). It also has some other features which are handy for testing external commands.

To track changes in test-requirements.txt and automatically rebuild the virtualenv, we’ll use the Makefile below. Not only does it compile and install our C program, but it also manages the virtualenv. Because .testenv isn’t a .PHONY target but a directory which exists on the filesystem, it will be rebuilt every time test-requirements.txt becomes newer than it.

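    # Makefile
    CC ?= gcc
    PREFIX ?= /usr/local
    bindir = $(PREFIX)/bin

    build: prog

    # -D creates the missing parent directories of the destination file,
    # so the destination must name the file itself, not just the directory
    install: build
    	install -Dm 755 prog "$(DESTDIR)$(bindir)/prog"

    prog: main.c
    	$(CC) "$<" -o "$@"

    .testenv: test-requirements.txt
    	rm -rf "$@"
    	python3 -m venv $@
    	$@/bin/pip3 install -r $<

    .PHONY: build install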
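The decision Make takes for the .testenv rule boils down to comparing modification times. As a rough sketch of that logic (needs_rebuild is a hypothetical helper, not part of the project):

```python
import os


def needs_rebuild(target: str, prerequisite: str) -> bool:
    """Mimic Make: rebuild when the target is missing or older than its prerequisite."""
    if not os.path.exists(target):
        return True
    return os.path.getmtime(prerequisite) > os.path.getmtime(target)
```

With this rule, needs_rebuild(".testenv", "test-requirements.txt") is true on a fresh checkout and again after every edit of the requirements file, which is the behaviour we want from the virtualenv.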

We don’t use Make to run the tests, because Make doesn’t have a nice way of passing arguments to pytest. Instead we have a separate check script, which invokes the build steps of both our program and the virtualenv just before it runs any tests. It is crucial to get the dependencies in the Makefile right, so that neither the program nor the virtualenv is rebuilt every time we run the check script.

    #!/bin/bash
    set -o errexit
    set -o nounset
    set -o pipefail

    DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null 2>&1 && pwd )"
    cd "${DIR}"

    make build .testenv
    .testenv/bin/pytest "$@"

When we run it without any arguments, via ./check, Pytest will automatically discover and run all the tests, but we can pass it any Pytest flag, e.g. to narrow down the list of tests.

Installing the program

Before we run the program we should install it. We could run it from our project directory, but that’s savage. We’re doing integration tests after all. Installing and running the program is part of its lifetime. Also, installed programs often behave differently. For example, they may have a different $0, or use libraries from different paths. By installing it outside the workspace we make sure that no random state of our workspace affects the tests.

We can use the install target in the Makefile to install the program to a temporary directory created by Pytest. Fortunately, the Makefile supports DESTDIR, which is a crucial part of program installation. We want to install the program once for each run of the test suite; I don’t think that installing it separately for each test has huge benefits.

Let’s create the first Pytest fixture, which we add to the test/conftest.py file. conftest.py is a file which is automatically loaded by Pytest. All fixtures defined in it are available to all the tests, without the need for explicit importing [4].

    import os
    from pathlib import Path

    import pytest
    import sh

    CURR_DIR = os.path.dirname(os.path.abspath(__file__))
    SRC_ROOT = os.path.dirname(CURR_DIR)


    @pytest.fixture(scope="session")
    def install(tmp_path_factory):
        destdir = tmp_path_factory.mktemp("installation")
        prefix = Path("/usr")

        env = os.environ.copy()
        env["DESTDIR"] = str(destdir)
        env["PREFIX"] = str(prefix)

        sh.make("-C", SRC_ROOT, "install", _env=env)
        return destdir, prefix

First, we must determine where we are and instruct Make to run the Makefile from the correct directory. SRC_ROOT points to the parent of the directory in which conftest.py resides. Next, we must set the environment for the Make call and actually call make -C SRC_ROOT install.

Please note that make install automatically invokes make build (that’s what the Standard Targets document recommends). We also call make build in the check script, so that no tests run at all if our program doesn’t compile. As I said earlier: get Make’s dependencies right to avoid double compilation.
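With DESTDIR in play, an installed file ends up under DESTDIR followed by PREFIX. The sketch below shows one way to compute that location with pathlib; staged_path is a hypothetical helper and the directory names are made up.

```python
from pathlib import PurePath, PurePosixPath


def staged_path(destdir: PurePath, prefix: PurePath, *parts: str) -> PurePath:
    """Return where a file installed under `prefix` lands inside `destdir`."""
    # Joining an absolute prefix directly would throw destdir away
    # (destdir / "/usr" is just "/usr"), so drop the prefix's root first.
    return destdir.joinpath(prefix.relative_to(prefix.anchor), *parts)


staged = staged_path(
    PurePosixPath("/tmp/installation0"), PurePosixPath("/usr"), "bin", "prog"
)
print(staged)  # /tmp/installation0/usr/bin/prog
```

Plain string concatenation of the two paths gives the same result for an absolute prefix; the helper merely makes the intent explicit.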

Running the program

Once installed, we can add the installation directory to the PATH and execute our program. We’ll create 2 new fixtures for this.

    import shutil


    @pytest.fixture
    def prog_env(install, monkeypatch):
        destdir, prefix = install
        path = os.path.join(str(destdir) + str(prefix), "bin")
        monkeypatch.setenv("PATH", path, prepend=os.pathsep)

        # make sure that no standard environment variable might affect our
        # program in any way
        monkeypatch.setenv("LANG", "C")
        # raising=False: don't fail when the variable isn't set in the first place
        monkeypatch.delenv("LD_LIBRARY_PATH", raising=False)
        monkeypatch.delenv("LD_PRELOAD", raising=False)


    @pytest.fixture
    def prog(install, prog_env):
        destdir, prefix = install
        exe = os.path.join(str(destdir) + str(prefix), "bin", "prog")
        assert exe == shutil.which("prog")
        return sh.Command("prog")

Here the prog fixture is self-testing. It makes sure that the install fixture worked and that the prog_env fixture correctly set the PATH variable, so a call to prog will select the current version of our program. If it didn’t do that, we might end up running a prog installed on the system (because we are also users of our programs, right?).

The prog fixture returns sh.Command, which is a callable subprocess wrapper. With it at hand we are ready to run our program in the first test. Let’s add one in the test/test_prog.py file and check whether the program printed what we wanted.

    def test_prog(prog):
        ret = prog("arg1", "arg2", "--switch", "switch arg")
        # the output belongs to the result of the call, not to the command
        lines = ret.stdout.decode().splitlines()
        assert lines == ["Hello, world!"]

We don’t have to check the return code. With the sh library we’re sure that it is 0, because otherwise the library raises an exception. We can control the allowed return codes with a special _ok_code argument, like prog(_ok_code=[1, 2]). It is useful in not-OK scenarios.
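For readers who would rather not depend on sh, roughly the same behaviour can be approximated with the standard library. This is only a sketch: run and its ok_codes parameter are hypothetical names, and a Python one-liner stands in for the program under test.

```python
import subprocess
import sys


def run(cmd, ok_codes=(0,)):
    """Run cmd and return the CompletedProcess.

    Raises subprocess.CalledProcessError when the exit status is not in
    ok_codes, loosely mirroring what sh does with _ok_code.
    """
    result = subprocess.run(cmd, capture_output=True)
    if result.returncode not in ok_codes:
        raise subprocess.CalledProcessError(
            result.returncode, cmd, output=result.stdout, stderr=result.stderr
        )
    return result


# A Python one-liner which prints to stderr and exits with status 1.
failing = [
    sys.executable,
    "-c",
    "import sys; sys.stderr.write('command failed\\n'); sys.exit(1)",
]
ret = run(failing, ok_codes=(1,))
assert ret.stderr.decode().splitlines() == ["command failed"]
```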

Parametrized tests

In many cases we want to test only small differences in the results of invocations with different arguments. It would be overly verbose to add a fully fledged test function like the one above. Instead, we could create a single parametrized test.

    import pytest


    @pytest.mark.parametrize(
        "arg_value,expected_output",
        [
            ("foo", ["foo accepted"]),
            ("bar", ["bar almost accepted, try harder next time"]),
        ],
    )
    def test_parametrized(prog, arg_value, expected_output):
        ret = prog("arg1", "arg2", "--switch", arg_value)
        lines = ret.stdout.decode().splitlines()
        assert lines == expected_output

Here our parametrized test accepts 2 additional arguments, which are automatically populated by Pytest: arg_value and expected_output. Pytest creates 2 test cases:

- with arg_value="foo" and expected_output=["foo accepted"]

- with arg_value="bar" and expected_output=["bar almost accepted, try harder next time"]

As a rule of thumb, I parametrize only similar use cases. If the test body would require complex logic to handle different parameters, I simply split such cases into their own tests. One example is writing separate parametrized tests for OK and not-OK cases. Instead of writing something like:

    if expected_success:
        assert lines == expected_output
    else:
        with pytest.raises(...):
            ...

I just write a completely new test:

    import pytest
    import sh


    @pytest.mark.parametrize(
        "arg_value",
        ["unaccepted value", "other unacceptable"],
    )
    def test_parametrized_failure(prog, arg_value):
        with pytest.raises(sh.ErrorReturnCode):
            prog("arg1", "arg2", "--switch", arg_value)

Testing failures

One way to test for program failure was presented above. The sh library throws an exception when the program returns any non-zero status, and we can test for it with the pytest.raises() context manager.

Testing with pytest.raises() can feel limited though. For example, it’s awkward to access the program’s stdout and stderr this way, because they are only available as attributes of the caught exception. Alternatively we can tell the sh library that some non-zero statuses are expected and that it shouldn’t treat them as errors. We do this by passing a special parameter named _ok_code:

    def test_error(prog):
        ret = prog("arg1", "arg2", _ok_code=[1])
        err_lines = ret.stderr.decode().splitlines()
        assert err_lines == ["command failed"]

Mocking program calls

Sometimes our programs must run other programs, and it would be useful to programmatically inspect how they were called and to control what they return. Inspired by mocking and monkeypatching concepts, I devised a clever little script to help me with that.

The program is called monkeyprog, but it should be installed inside a monkeypatched PATH under the name of the program which it fakes. For example, if it was to fake grep, it should be installed as grep.

Monkeyprog has 2 main features:

- it reads special environment variables which hold JSON arrays defining its response (list of output lines and return code) for a particular input

- it saves all calls inside a JSON file which test environment can read and inspect

For example, if we wanted to react to a particular call as grep, we’d set MONKEYPROG_GREP_RESPONSES to [[".* -e query file", ["query line1", "query line2"]]]. Similarly, we could set MONKEYPROG_GREP_RETURNCODES to control the return status of grep’s mock. It would keep all calls inside the grep-calls.json file, inside the same directory it resides in.
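To give a feeling for how such a fake program might work, here is a minimal sketch of the two features. It is not monkeyprog’s actual code: the FAKE_RESPONSES and FAKE_RETURNCODES variable names, both functions, and the use of re.search are assumptions made for illustration.

```python
import json
import re


def fake_program_response(argv, environ):
    """Pick (output_lines, returncode) for a call.

    FAKE_RESPONSES holds a JSON array of [pattern, lines] pairs and
    FAKE_RETURNCODES a JSON array of [pattern, code] pairs. The first
    pattern matching the joined command line wins.
    """
    cmdline = " ".join(argv)
    lines, code = [], 0
    for pattern, output in json.loads(environ.get("FAKE_RESPONSES", "[]")):
        if re.search(pattern, cmdline):
            lines = output
            break
    for pattern, returncode in json.loads(environ.get("FAKE_RETURNCODES", "[]")):
        if re.search(pattern, cmdline):
            code = returncode
            break
    return lines, code


def record_call(calls_file, argv):
    """Append one call to a JSON file which the test can inspect later."""
    try:
        calls = json.loads(calls_file.read_text())
    except FileNotFoundError:
        calls = []
    calls.append({"args": list(argv)})
    calls_file.write_text(json.dumps(calls))
```

A real script would call both functions with sys.argv and os.environ, print the chosen lines and exit with the chosen code.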

First, we should copy monkeyprog to the test/bin directory. To use it, we must call the provided monkeyprog factory fixture, which installs monkeyprog under the given name and sets PATH for the rest of the test case. We can choose to install monkeyprog by creating a symbolic link or by copying the file (which is useful if we need to test changing permissions: on some platforms, notably on Linux, that would otherwise follow the symlink and change the original file).

Next, we set up our mocked program by calling on_request() one or more times, followed by monkeypatch(). We inspect the calls by checking the calls property. Easy.

    def test_search_grep_call(prog, monkeyprogram):
        grep = monkeyprogram("grep", add_to_path=True, symlink=False)

        somefile = "file.txt"
        grep.on_request(".* -e query1", f"{somefile}:11:a query1")
        grep.on_request(".* -e query2", returncode=1)
        grep.monkeypatch()

        prog("arg1")

        assert grep.called
        assert len(grep.calls) == 2
        assert grep.calls[0]["args"] == ["grep", "-l", "-e", "query1", somefile]
        assert grep.calls[1]["args"] == ["grep", "-l", "-e", "query2", somefile]

Debugging

- --pdb

- inspecting prog’s stdout and stderr

[1] By default temporary directories are located in /tmp, which is often mounted in RAM.

[2] If you insist on not using Make, just add these commands to the check script. You have to implement dependency management yourself though. Hint: man test and search for FILE1 -nt FILE2.

[3] You might want to add it to .gitignore.

[4] This applies only to fixtures. Ordinary helper functions must be imported first.